Artificial intelligence is no longer limited to cloud servers. Processing is moving closer to where data is generated, and that shift is driving demand for AI hardware in edge devices. From smart cameras and autonomous systems to industrial automation and healthcare equipment, edge AI enables faster decisions and lower latency, and it improves data security because sensitive data can stay on the device.

At the core of this evolution is advanced System-on-Chip (SoC) development, which integrates computing, memory, connectivity, and AI acceleration into compact, power-efficient silicon platforms.

What Is AI Hardware for Edge Devices?

AI hardware for edge devices refers to specialized semiconductor solutions designed to perform AI inference and data processing locally, without relying on continuous cloud connectivity.

Common edge AI applications include:

- Smart surveillance and vision systems

- Robotics and autonomous vehicles

- Industrial IoT and predictive maintenance

- Medical imaging and diagnostics

- Consumer electronics and smart appliances

These applications demand low latency, real-time performance, and optimized power consumption, which makes SoC-based architectures the preferred choice.

Why SoC Development Is Essential for Edge AI

General-purpose processors on their own struggle to meet the performance and energy-efficiency requirements of AI workloads at the edge. Modern SoC development addresses this by integrating multiple specialized processing engines on a single chip.

Key advantages of AI-enabled SoCs include:

- Reduced power consumption

- Faster AI inference

- Compact device design

- Improved thermal efficiency

- Optimized workload distribution

By combining CPUs, GPUs, NPUs, and dedicated accelerators, SoCs deliver scalable performance tailored for edge environments.

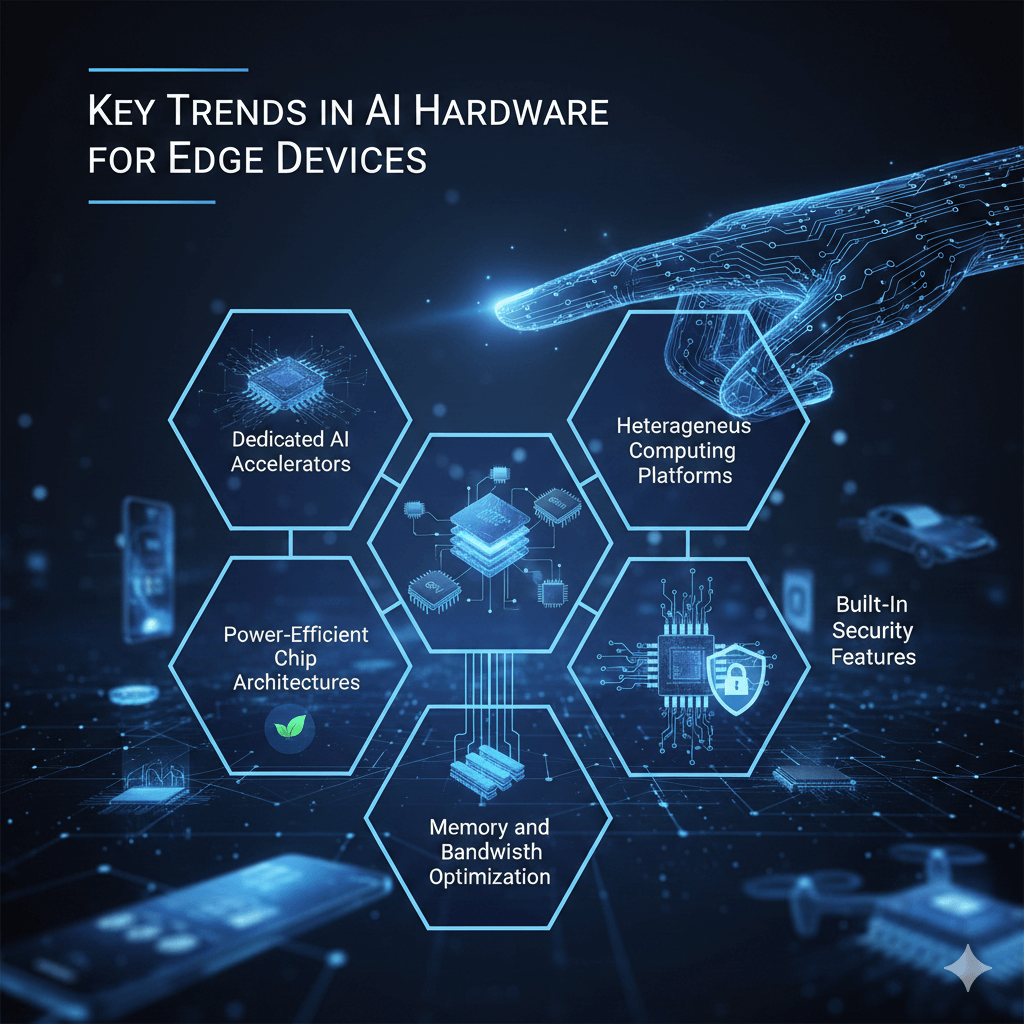

Key Trends in AI Hardware for Edge Devices

1. Dedicated AI Accelerators

Many modern SoCs include Neural Processing Units (NPUs) dedicated to deep learning inference. Compared with running the same models on general-purpose CPU cores, these accelerators typically deliver higher throughput at lower energy per inference, which makes them well suited to real-time applications such as facial recognition and object detection.

2. Power-Efficient Chip Architectures

Energy efficiency is a top priority for edge AI hardware. Semiconductor designers are implementing:

- Advanced low-power design methodologies

- Dynamic voltage and frequency scaling

- Smaller process nodes such as 7 nm and 5 nm

- Intelligent power management techniques

These innovations help maximize performance per watt while extending device battery life.
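
To see why dynamic voltage and frequency scaling helps, it can be useful to work through the standard first-order approximation for CMOS dynamic power, P = activity x C x V^2 x f. The sketch below is only an illustration: the operating points, the effective capacitance `C_EFF`, and the cycle count `CYCLES` are assumed values, not figures from any real chip, and static leakage is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """One DVFS operating point (illustrative values, not from a datasheet)."""
    name: str
    voltage_v: float      # supply voltage in volts
    frequency_hz: float   # clock frequency in hertz


def dynamic_power_w(op, switched_capacitance_f, activity=1.0):
    """Approximate dynamic power: P = activity * C * V^2 * f."""
    return activity * switched_capacitance_f * op.voltage_v ** 2 * op.frequency_hz


def energy_per_task_j(op, cycles, switched_capacitance_f, activity=1.0):
    """Energy for a fixed number of cycles: power multiplied by run time."""
    run_time_s = cycles / op.frequency_hz
    return dynamic_power_w(op, switched_capacitance_f, activity) * run_time_s


# Hypothetical operating points and constants, chosen only for illustration.
HIGH = OperatingPoint("high", voltage_v=1.0, frequency_hz=1.0e9)
LOW = OperatingPoint("low", voltage_v=0.8, frequency_hz=0.5e9)
C_EFF = 1.0e-9        # effective switched capacitance in farads (assumed)
CYCLES = 10_000_000   # cycles needed for one inference (assumed)

for op in (HIGH, LOW):
    power = dynamic_power_w(op, C_EFF)
    energy = energy_per_task_j(op, CYCLES, C_EFF)
    print(f"{op.name}: {power:.2f} W, {energy * 1e3:.2f} mJ per task")
```

Under these assumptions the lower operating point takes twice as long per task but uses less energy, because energy per task scales with the square of the voltage. On real silicon, leakage power during the longer run time reduces this benefit, so the best operating point depends on the device.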

3. Heterogeneous Computing Platforms

Edge AI workloads benefit from heterogeneous SoC architectures that combine:

- CPU cores for system control

- GPUs for parallel computation

- NPUs for AI inference

- DSPs for signal processing

This approach enables better task distribution and ensures real-time performance across multiple workloads.
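
The task distribution described above can be sketched as a simple routing table. This is a minimal illustration rather than how any particular SoC runtime works: the workload kinds, the `PREFERRED_ENGINE` mapping, and the CPU fallback rule are all assumptions made for the example.

```python
from enum import Enum


class Engine(Enum):
    CPU = "cpu"
    GPU = "gpu"
    NPU = "npu"
    DSP = "dsp"


# Hypothetical mapping from workload kind to preferred engine.
PREFERRED_ENGINE = {
    "control": Engine.CPU,
    "parallel_compute": Engine.GPU,
    "inference": Engine.NPU,
    "signal_processing": Engine.DSP,
}


def pick_engine(kind, available):
    """Return the preferred engine for a workload kind, falling back to the CPU."""
    preferred = PREFERRED_ENGINE.get(kind, Engine.CPU)
    return preferred if preferred in available else Engine.CPU


def dispatch(tasks, available):
    """Group (task name, workload kind) pairs into per-engine queues."""
    engines = set(available) | {Engine.CPU}  # the CPU is assumed to always exist
    queues = {engine: [] for engine in engines}
    for name, kind in tasks:
        queues[pick_engine(kind, engines)].append(name)
    return queues


# Example: a device with a CPU and an NPU but no GPU or DSP.
tasks = [
    ("read_sensor", "control"),
    ("detect_objects", "inference"),
    ("denoise_audio", "signal_processing"),
]
queues = dispatch(tasks, {Engine.CPU, Engine.NPU})
for engine, names in sorted(queues.items(), key=lambda item: item[0].value):
    print(engine.value, names)
```

In this example the signal-processing task lands on the CPU because no DSP is present. A production scheduler would also weigh queue depth, latency deadlines, and power state, none of which this sketch models.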

4. Memory and Bandwidth Optimization

High-speed data movement is critical for AI performance. Edge AI hardware designs are focusing on:

- On-chip SRAM expansion

- High-bandwidth memory interfaces

- Unified memory architectures

- Reduced memory access latency

These enhancements improve throughput and reduce dependence on external memory, which is slower and costs more energy per access than on-chip memory.
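
One common way to reason about why memory bandwidth matters is the roofline model: attainable throughput is the lower of the compute peak and the memory bandwidth multiplied by the operations performed per byte moved. The sketch below applies that bound with assumed numbers. `PEAK_OPS`, both bandwidth figures, and `OPS_PER_BYTE` are hypothetical and do not describe any specific device.

```python
def attainable_ops_per_s(peak_ops_per_s, bandwidth_bytes_per_s, ops_per_byte):
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(peak_ops_per_s, bandwidth_bytes_per_s * ops_per_byte)


def is_memory_bound(peak_ops_per_s, bandwidth_bytes_per_s, ops_per_byte):
    """True when memory traffic, not compute, sets the throughput ceiling."""
    return bandwidth_bytes_per_s * ops_per_byte < peak_ops_per_s


# Hypothetical figures, chosen only for illustration.
PEAK_OPS = 4.0e12          # accelerator peak, operations per second (assumed)
EXTERNAL_BW = 16.0e9       # external memory bandwidth, bytes per second (assumed)
ON_CHIP_BW = 256.0e9       # on-chip SRAM bandwidth, bytes per second (assumed)
OPS_PER_BYTE = 50.0        # arithmetic intensity of the workload (assumed)

for label, bandwidth in (("external memory", EXTERNAL_BW), ("on-chip SRAM", ON_CHIP_BW)):
    tops = attainable_ops_per_s(PEAK_OPS, bandwidth, OPS_PER_BYTE) / 1e12
    bound = "memory-bound" if is_memory_bound(PEAK_OPS, bandwidth, OPS_PER_BYTE) else "compute-bound"
    print(f"{label}: {tops:.1f} TOPS ({bound})")
```

With these assumed values, the same accelerator reaches only a fraction of its peak when fed from external memory and reaches its full peak when the working set fits in on-chip SRAM.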

5. Built-In Security Features

Security is becoming a core requirement in edge AI applications. Today’s SoCs include hardware-level protection such as:

- Secure boot

- Hardware encryption engines

- Trusted execution environments

- Secure key storage

These features help protect sensitive data and ensure system integrity in connected environments.
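
The idea behind secure boot can be illustrated with a heavily simplified check: measure the firmware image and refuse to run it if the measurement does not match a trusted reference. The sketch below uses only a SHA-256 digest comparison from the Python standard library. Real secure boot chains typically verify an asymmetric signature against a key anchored in ROM or fuses, so treat the function names and the digest-only scheme here as assumptions made for illustration.

```python
import hashlib
import hmac


def image_digest(image: bytes) -> bytes:
    """Measure a firmware image with SHA-256."""
    return hashlib.sha256(image).digest()


def verify_image(image: bytes, trusted_digest: bytes) -> bool:
    """Compare the measured digest with the trusted one in constant time."""
    return hmac.compare_digest(image_digest(image), trusted_digest)


def boot(image: bytes, trusted_digest: bytes) -> str:
    """Return "boot" only if the image matches the trusted digest, else "halt"."""
    return "boot" if verify_image(image, trusted_digest) else "halt"


# Hypothetical firmware contents; on a device the trusted digest would live in
# protected storage rather than being computed next to the image.
good_image = b"firmware v1.0"
trusted = image_digest(good_image)
tampered_image = b"firmware v1.0 with extra code"

print(boot(good_image, trusted))
print(boot(tampered_image, trusted))
```

The constant-time comparison avoids leaking how many leading bytes matched, which is a small example of the kind of detail that hardware security blocks are designed to handle.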

Challenges in Edge AI SoC Development

Despite rapid advancements, several challenges remain:

- Balancing performance and power constraints

- Managing thermal limitations

- Supporting multiple AI frameworks

- Achieving faster time-to-market

- Ensuring scalability across applications

To address these challenges, semiconductor companies are adopting IP reuse strategies, modular SoC architectures, and advanced verification workflows.

Future of AI Hardware for Edge Devices

The next generation of edge AI hardware is expected to focus on:

- Ultra-low-power AI chips

- Chiplet-based SoC designs

- Advanced packaging technologies

- AI-specific instruction sets

- Edge-to-cloud hybrid computing

These innovations are likely to accelerate adoption further across the automotive, healthcare, smart city, and industrial automation sectors.

Conclusion: Partner with Silicon Patterns for Advanced Edge AI Solutions

As demand for AI hardware for edge devices continues to grow, companies need reliable semiconductor partners with deep expertise in SoC design, verification, validation, and post-silicon support.

Silicon Patterns offers end-to-end semiconductor engineering services that help organizations accelerate edge AI product development. With strong capabilities in design verification, physical design, DFT, validation, and system-level testing, Silicon Patterns enables faster time-to-market and high-quality silicon outcomes.

Whether you are developing AI-enabled SoCs for automotive, industrial IoT, or consumer electronics, Silicon Patterns provides scalable, cost-effective, and performance-driven solutions tailored to your business needs.

Empower your edge AI innovation with Silicon Patterns, where silicon meets intelligence.